The Algorithm Whisperer: Our Newest SLM Specialist

Why we trained a 7B model that speaks both pseudocode and C++

"The distance between 'I understand the algorithm' and 'I wrote the correct C++' is where most implementations die."

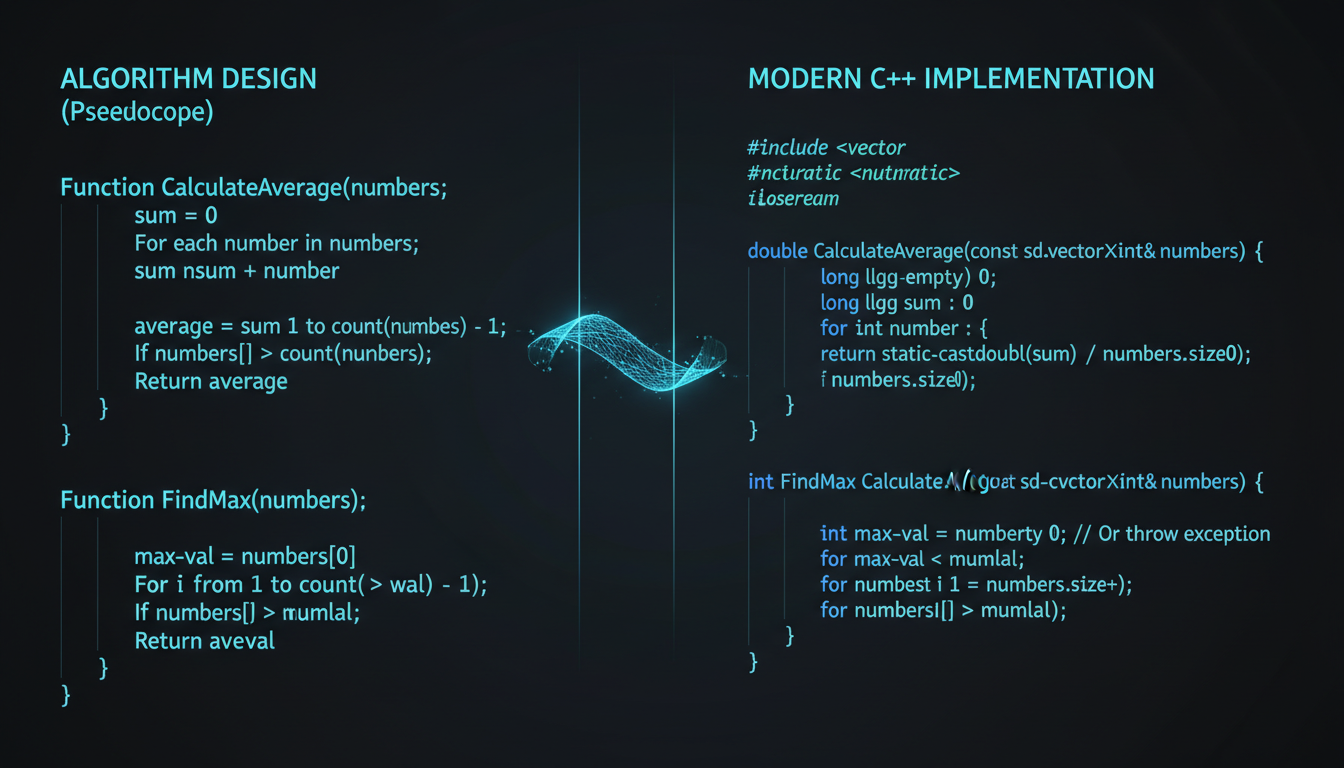

Here's something nobody talks about: the hardest part of implementing algorithms isn't understanding the algorithm. It's translating from the beautiful, hand-wavy pseudocode in your textbook to the cranky, type-checking, memory-managing reality of C++.

You've seen it a thousand times. The algorithm textbook shows you Dijkstra's shortest path in clean pseudocode: for each vertex v in graph... Then you sit down to implement it in C++ and suddenly you're drowning in questions. std::priority_queue or std::set? Wait, do I need std::greater or std::less? How do I handle the adjacency list? Should this be a reference or a copy? Is this move-constructible?

Most LLMs can't help you here. They'll give you code that compiles but uses the wrong data structure. Or code that works but runs in O(n²) when it should be O(n log n). They don't understand the why behind algorithm design—they just pattern-match syntax.

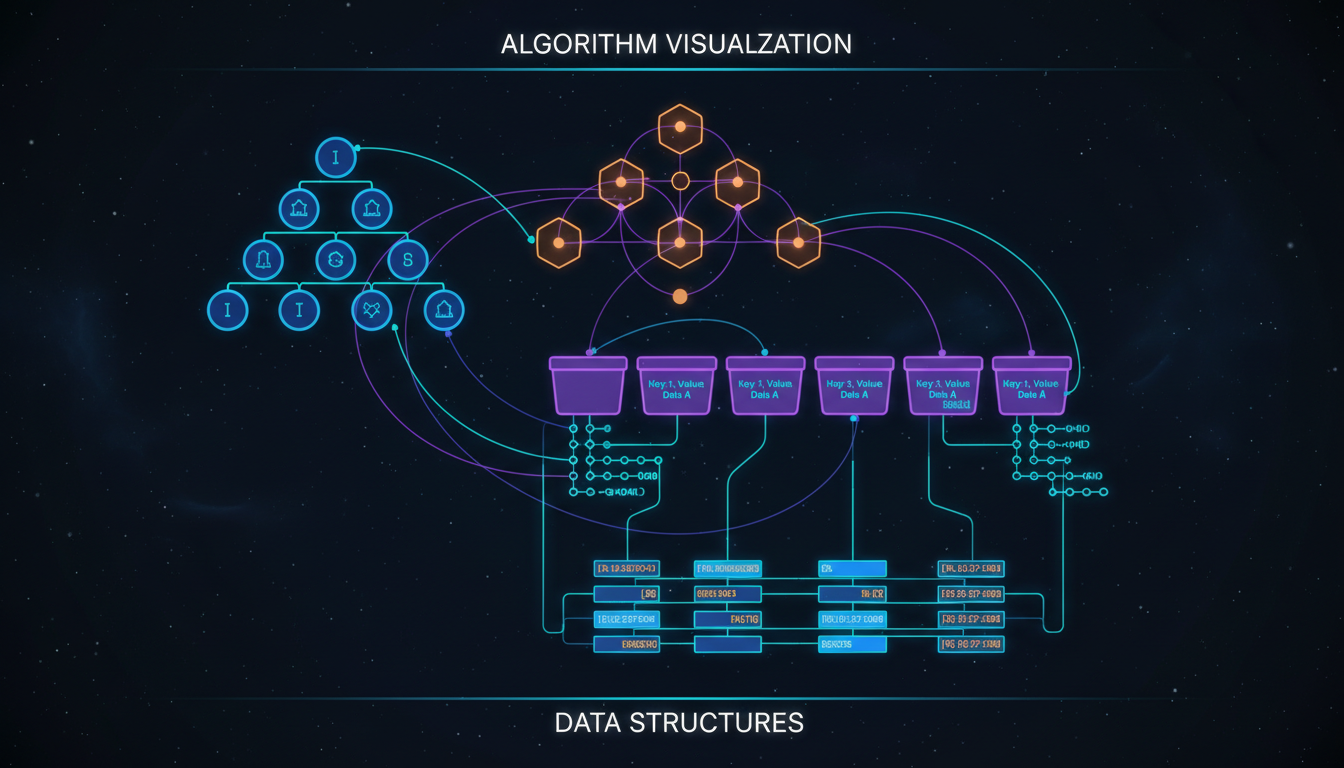

So we built a model that understands both worlds. Meet Algorithm SLM (Algo-7B): our 8th specialist, trained to be fluent in pseudocode, complexity analysis, and idiomatic C++ implementations. Think of it as a bridge between algorithm theory and production code.

1. The Pseudocode Problem Nobody Solves

Pseudocode is the universal language of algorithms. Every textbook uses it. Every paper uses it. Every whiteboard interview uses it. But it's also a lie—a beautiful, seductive lie that hides all the messy implementation details.

Exhibit A: Textbook Pseudocode

    function BinarySearch(array, target):
        left = 0
        right = length(array) - 1
        while left <= right:
            mid = (left + right) / 2
            if array[mid] == target:
                return mid
            else if array[mid] < target:
                left = mid + 1
            else:
                right = mid - 1
        return -1 // not found

Beautiful. Clean. Everyone understands this. But now implement it in C++...

The C++ Reality Check

    #include <functional>
    #include <iterator>
    #include <optional>

    // Named binary_search_index so unqualified calls can't collide with
    // std::binary_search through argument-dependent lookup.
    template<typename RandomIt, typename T,
             typename Compare = std::less<>>
    std::optional<typename std::iterator_traits<RandomIt>::difference_type>
    binary_search_index(RandomIt first, RandomIt last, const T& target,
                        Compare comp = Compare{}) {
        auto left = first;
        auto right = last;  // half-open [left, right): safe for empty ranges
        while (left < right) {
            // left + distance / 2 sidesteps the overflow in (left + right) / 2
            auto mid = left + std::distance(left, right) / 2;
            if (comp(*mid, target)) {
                left = std::next(mid);
            } else if (comp(target, *mid)) {
                right = mid;
            } else {
                return std::distance(first, mid);  // neither is less: equivalent
            }
        }
        return std::nullopt;
    }

Suddenly we're dealing with templates, iterators, iterator arithmetic, comparison functors, optional return types, and a half-open range, because a literal translation of right = mid - 1 would step std::prev before first, which is undefined behavior. Oh, and we avoided the integer overflow bug that the pseudocode completely ignores.

This gap is why algorithm courses and production code feel like different universes. The pseudocode doesn't tell you:

- Should this be a template or a concrete function?

- What iterator category do I need? (ForwardIterator? RandomAccessIterator?)

- How do I handle const-correctness?

- When should I use std::move?

- What's the right return type for "not found"? (exception? optional? iterator?)

- Do I need noexcept specifications?

This is where Algo-7B comes in. It doesn't just translate syntax—it understands the semantic mapping between algorithm concepts and C++ idioms.
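Several of the questions above already have a standard-library answer. As a minimal sketch (the helper name find_sorted is hypothetical, not something Algo-7B is documented to emit), the version below leans on std::lower_bound, which only requires forward iterators, and reports "not found" by returning last:

```cpp
#include <algorithm>
#include <cassert>
#include <functional>
#include <iterator>

// Hypothetical helper: returns an iterator to an element equivalent to
// `value` in the sorted range [first, last), or `last` if there is none.
template <typename ForwardIt, typename T, typename Compare = std::less<>>
ForwardIt find_sorted(ForwardIt first, ForwardIt last, const T& value,
                      Compare comp = Compare{}) {
    // Precondition: the range is sorted by comp (checked in debug builds, O(n)).
    assert(std::is_sorted(first, last, comp));
    // std::lower_bound does O(log n) comparisons; iterator stepping is O(n)
    // unless the iterators are random access.
    ForwardIt it = std::lower_bound(first, last, value, comp);
    // *it is the first element not less than value. It is a match only if
    // value is also not less than *it.
    return (it != last && !comp(value, *it)) ? it : last;
}
```

Returning an iterator mirrors the conventions of the standard algorithms; a caller that wants an index can apply std::distance to the result.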

2. Training a Model That Speaks Both Languages

Algo-7B is trained on 170 billion tokens from a very specific curriculum. Not random code from GitHub, but carefully curated pairs of algorithm descriptions, pseudocode, complexity analysis, and correct implementations.

Training Data Sources

1. Algorithm Textbooks (Digitized)

Cormen et al. (CLRS), Sedgewick, Skiena, Knuth. We extracted pseudocode, complexity proofs, and worked examples. These form the "ground truth" for algorithm understanding.

2. Competitive Programming Solutions

Codeforces, TopCoder, LeetCode (with permission). These provide real-world algorithm implementations with time/space constraints. Crucially, they include failed solutions with time limit exceeded (TLE) or wrong answer (WA)—teaching the model what doesn't work.

3. Academic Papers (Algorithm Sections)

We mined algorithm descriptions from papers in arXiv, ACM, IEEE. These often include novel algorithms with detailed pseudocode and complexity analysis. Excellent for teaching the model how to reason about new, unseen algorithms.

4. STL Source Code

GCC's libstdc++, LLVM's libc++, Microsoft's STL. Reference implementations of standard algorithms. The model learns how expert C++ developers implement classic algorithms (spoiler: with lots of iterator magic and template metaprogramming).

5. Data Structure Libraries

Boost.Graph, LEMON, custom implementations from production codebases. Real-world examples of complex data structures (B-trees, segment trees, persistent data structures).

The Paired Training Approach

The key innovation: we don't just train on code. We train on pairs:

- Pseudocode → C++: Given pseudocode, generate idiomatic C++ implementation

- C++ → Pseudocode: Given C++ code, extract the algorithm in pseudocode

- Description → Implementation: Natural language algorithm description to code

- Code → Complexity Analysis: Analyze time/space complexity of given code

- Inefficient → Optimized: Take O(n²) code, suggest O(n log n) alternative

This bidirectional training ensures the model doesn't just memorize patterns—it understands the underlying algorithm semantics.

3. What Can Algo-7B Actually Do?

Let's get concrete. Here are the kinds of queries Algo-7B handles well:

Query: "Implement quicksort with 3-way partitioning in modern C++"

→ Model generates code built on two std::partition passes, explains when 3-way partitioning helps (many duplicates), and suggests std::sort for most cases (better constant factors).

    template<typename RandomIt, typename Compare = std::less<>>
    void quicksort_3way(RandomIt first, RandomIt last, Compare comp = {}) {
        if (std::distance(first, last) <= 1) return;
        auto pivot = *first;
        auto eq_begin = std::partition(first, last,
            [&](const auto& x) { return comp(x, pivot); });
        auto eq_end = std::partition(eq_begin, last,
            [&](const auto& x) { return !comp(pivot, x); });
        quicksort_3way(first, eq_begin, comp);
        quicksort_3way(eq_end, last, comp);
    }

Query: "What's the complexity of this code?"

    for (int i = 0; i < n; i++) {
        for (int j = 1; j < n; j *= 2) {
            process(j);
        }
    }

→ Model correctly identifies this as O(n log n): the outer loop runs n times, and the inner loop doubles j each iteration, so it runs about log₂ n times per pass. Crucially, it doesn't get tricked by the nested loops; many models incorrectly say O(n²).

Query: "Convert this graph BFS to use STL containers efficiently"

→ Model suggests std::deque for the queue (not std::queue, an unnecessary wrapper), std::vector<bool> for visited (space-efficient), and std::vector<std::vector<int>> for adjacency lists (cache-friendly).
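A minimal sketch of that container advice, assuming an unweighted directed graph with vertices numbered 0..n-1. The function name bfs_distances and the -1 sentinel for unreachable vertices are illustrative choices, not the model's actual output:

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

// Returns the hop count from `source` to every vertex, or -1 if unreachable.
std::vector<int> bfs_distances(const std::vector<std::vector<int>>& adj,
                               int source) {
    assert(source >= 0 && static_cast<std::size_t>(source) < adj.size());
    std::vector<bool> visited(adj.size(), false);  // one bit per vertex
    std::vector<int> dist(adj.size(), -1);
    std::deque<int> frontier;                      // used directly as a FIFO

    visited[source] = true;
    dist[source] = 0;
    frontier.push_back(source);

    while (!frontier.empty()) {
        const int u = frontier.front();
        frontier.pop_front();
        for (const int v : adj[u]) {
            if (!visited[v]) {
                // Mark on enqueue so each vertex enters the queue once.
                visited[v] = true;
                dist[v] = dist[u] + 1;
                frontier.push_back(v);
            }
        }
    }
    return dist;
}
```

Marking vertices when they are enqueued, rather than when they are dequeued, keeps the queue size bounded by the number of vertices.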

Query: "Why is my Dijkstra's implementation slow?"

    std::vector<int> dist(n, INT_MAX);
    for each vertex u not yet visited:
        v = vertex with minimum dist[v]
        for each neighbor w of v:
            relax(v, w)

→ Model identifies the O(n²) "scan for minimum" and suggests using std::priority_queue or std::set to achieve O((V + E) log V). Provides working code with both options and explains the tradeoff (priority_queue is faster but doesn't support decrease-key directly).
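For reference, the priority_queue variant could look like the sketch below. It assumes non-negative edge weights and an adjacency list of (neighbor, weight) pairs; the names Edge, kInf, and dijkstra are illustrative. Because std::priority_queue has no decrease-key, the sketch pushes a fresh entry on every improvement and skips stale entries when they are popped:

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <limits>
#include <queue>
#include <utility>
#include <vector>

using Edge = std::pair<int, long long>;  // (neighbor, non-negative weight)
constexpr long long kInf = std::numeric_limits<long long>::max();

// Shortest distances from `source`; unreachable vertices keep kInf.
std::vector<long long> dijkstra(const std::vector<std::vector<Edge>>& adj,
                                int source) {
    assert(source >= 0 && static_cast<std::size_t>(source) < adj.size());
    using State = std::pair<long long, int>;  // (distance, vertex)
    // std::greater turns the default max-heap into a min-heap.
    std::priority_queue<State, std::vector<State>, std::greater<State>> heap;
    std::vector<long long> dist(adj.size(), kInf);

    dist[source] = 0;
    heap.emplace(0LL, source);

    while (!heap.empty()) {
        const auto [d, u] = heap.top();
        heap.pop();
        if (d > dist[u]) continue;  // stale entry: a shorter path was found
        for (const auto& [v, w] : adj[u]) {
            if (d + w < dist[v]) {
                dist[v] = d + w;
                heap.emplace(dist[v], v);  // lazy stand-in for decrease-key
            }
        }
    }
    return dist;
}
```

The heap can hold up to one entry per edge, so the bound is O(E log E), which is the same as O(E log V) for simple graphs.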

4. The Complexity Oracle: Beyond Big-O Handwaving

One of Algo-7B's superpowers is complexity analysis: not just parroting "this is O(n log n)", but actually reasoning through the analysis step by step.

Example: Nested Loop Analysis

Give it this code:

    void mystery(int n) {
        for (int i = 1; i <= n; i *= 2) {
            for (int j = 0; j < i; j++) {
                process();
            }
        }
    }

Algo-7B's response:

Analysis:

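The outer loop runs ⌊log₂ n⌋ + 1 times (i doubles each iteration: 1, 2, 4, 8, ..., up to the largest power of two ≤ n).

The inner loop runs i times for each iteration of the outer loop.

Total operations: 1 + 2 + 4 + 8 + ... + 2^k ≤ 2n - 1, where 2^k is the largest power of two ≤ n (geometric series; equality holds when n is a power of two)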

Time Complexity: O(n)

This is a tight Θ(n), not Θ(n log n): even though there are two nested loops, the inner loop's iteration count depends on the outer loop's variable, so the passes form a geometric series dominated by the final one.
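A quick way to sanity-check an analysis like this is to count iterations instead of calling process(). The sketch below mirrors the loops of mystery; count_mystery_calls is a hypothetical helper, and it uses a 64-bit loop variable so that doubling i past n cannot overflow:

```cpp
#include <cassert>
#include <cstdint>

// Counts how many times mystery(n) would call process().
std::int64_t count_mystery_calls(int n) {
    assert(n >= 0);  // the analysis assumes a non-negative size
    std::int64_t calls = 0;
    for (std::int64_t i = 1; i <= n; i *= 2) {
        for (std::int64_t j = 0; j < i; j++) {
            ++calls;  // stands in for process()
        }
    }
    return calls;
}
```

For n = 1024 this returns 2047, which is 2n - 1. For n = 10 the outer loop stops at i = 8, so it returns 15.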

Beyond Asymptotic Analysis

But Algo-7B goes further. It also considers:

- Cache behavior: "This algorithm has poor locality; consider blocking or tiling"

- Practical constants: "Merge sort is O(n log n) but has worse constant factors than quicksort for small arrays"

- Space complexity: "This is O(n) space but you could make it O(1) with in-place modification"

- Amortized analysis: "Vector push_back is O(1) amortized, not O(1) worst-case"
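The amortized claim about push_back can be observed directly. This sketch (count_reallocations is a hypothetical helper) counts capacity changes while a vector grows. The exact growth factor is implementation-defined, so the count varies between standard libraries, but geometric growth keeps it logarithmic in n:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Counts how often a std::vector<int> reallocates while growing to n elements.
std::size_t count_reallocations(std::size_t n) {
    std::vector<int> v;
    std::size_t reallocations = 0;
    std::size_t last_capacity = v.capacity();
    for (std::size_t i = 0; i < n; ++i) {
        v.push_back(static_cast<int>(i));
        if (v.capacity() != last_capacity) {
            // This push_back was one of the expensive O(size) ones.
            ++reallocations;
            last_capacity = v.capacity();
        }
    }
    assert(v.size() == n);
    return reallocations;
}
```

Most calls are cheap and a few pay for a full copy or move of the buffer, which is what "O(1) amortized, not O(1) worst-case" describes.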

5. The Data Structure Whisperer

Ask Algo-7B "what data structure should I use?" and you won't get a generic answer. It considers your access patterns, insertion/deletion frequency, memory constraints, and cache behavior.

Query: "Fast lookups, rare insertions"

→ std::vector with std::lower_bound

"If insertions are rare, keep a sorted vector. Binary search is O(log n), cache-friendly, and faster than std::set for small-to-medium sizes. Use std::upper_bound to find the insertion point; the insert itself is O(n) because later elements shift, but it's rare."

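A minimal sketch of the sorted-vector approach, assuming int keys and allowing duplicates. SortedIntVector is a hypothetical wrapper written for illustration:

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical wrapper: a vector kept sorted at all times.
class SortedIntVector {
public:
    // O(log n) lookup via binary search over contiguous memory.
    bool contains(int value) const {
        const auto it = std::lower_bound(data_.begin(), data_.end(), value);
        return it != data_.end() && *it == value;
    }

    // O(log n) to find the position, O(n) to shift later elements.
    void insert(int value) {
        const auto pos = std::upper_bound(data_.begin(), data_.end(), value);
        data_.insert(pos, value);
        assert(std::is_sorted(data_.begin(), data_.end()));
    }

    const std::vector<int>& values() const { return data_; }

private:
    std::vector<int> data_;
};
```

When many elements arrive at once, appending them all and sorting once is usually cheaper than repeated single inserts.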
Query: "Frequent insertions/deletions, no ordering"

→ std::unordered_set or std::unordered_map

"Hash table with O(1) average operations. Provide a good hash function. Consider robin-hood hashing or open addressing if you need predictable performance."

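What counts as a good hash function depends on the key type. One hedged sketch for a small struct key: pack the fields into 64 bits and pass them through the SplitMix64 finalizer so that nearby keys spread across buckets. Point, PointHash, and count_distinct are hypothetical names, and the mixer is one common choice rather than a requirement:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <unordered_set>
#include <vector>

struct Point {
    std::int32_t x;
    std::int32_t y;
    bool operator==(const Point& other) const {
        return x == other.x && y == other.y;
    }
};

struct PointHash {
    std::size_t operator()(const Point& p) const noexcept {
        // Pack both coordinates into one 64-bit word.
        std::uint64_t h =
            (static_cast<std::uint64_t>(static_cast<std::uint32_t>(p.x)) << 32) |
            static_cast<std::uint32_t>(p.y);
        // SplitMix64 finalizer: scrambles the bits of the packed word.
        h += 0x9e3779b97f4a7c15ULL;
        h = (h ^ (h >> 30)) * 0xbf58476d1ce4e5b9ULL;
        h = (h ^ (h >> 27)) * 0x94d049bb133111ebULL;
        return static_cast<std::size_t>(h ^ (h >> 31));
    }
};

// Number of distinct points, using the custom hash.
std::size_t count_distinct(const std::vector<Point>& points) {
    const std::unordered_set<Point, PointHash> seen(points.begin(), points.end());
    assert(seen.size() <= points.size());
    return seen.size();
}
```

std::unordered_set still uses separate chaining underneath; the open-addressing and robin-hood designs mentioned above need a third-party or hand-written table.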
Query: "Range minimum queries"

→ Segment tree or sparse table

"For static arrays: sparse table (O(n log n) preprocessing, O(1) query). For updates: segment tree (O(log n) update and query). Provides implementation templates for both."

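As an illustration of the static case, here is a compact sparse table sketch for range-minimum queries over int values. The class name SparseTableMin and its interface are assumptions made for this example, not the templates Algo-7B provides:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

class SparseTableMin {
public:
    // O(n log n) preprocessing: levels_[k][i] is the minimum of the
    // 2^k elements starting at index i.
    explicit SparseTableMin(const std::vector<int>& values)
        : log2_(values.size() + 1, 0) {
        const std::size_t n = values.size();
        for (std::size_t i = 2; i <= n; ++i) log2_[i] = log2_[i / 2] + 1;
        levels_.push_back(values);
        for (std::size_t len = 2; len <= n; len *= 2) {
            const std::vector<int>& prev = levels_.back();
            std::vector<int> cur(n - len + 1);
            for (std::size_t i = 0; i < cur.size(); ++i) {
                cur[i] = std::min(prev[i], prev[i + len / 2]);
            }
            levels_.push_back(std::move(cur));
        }
    }

    // O(1) query on the closed interval [l, r]: two overlapping
    // power-of-two windows cover the whole range.
    int range_min(std::size_t l, std::size_t r) const {
        assert(l <= r && r < levels_.front().size());
        const std::size_t k = log2_[r - l + 1];
        return std::min(levels_[k][l],
                        levels_[k][r + 1 - (std::size_t{1} << k)]);
    }

private:
    std::vector<std::size_t> log2_;         // floor(log2(i)) for i >= 1
    std::vector<std::vector<int>> levels_;
};
```

The overlapping-window trick works because taking a minimum twice over the same element changes nothing; it would not work for sums, which is why range-sum queries use prefix sums or a segment tree instead.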
Query: "Priority queue with decrease-key"

→ std::set or Fibonacci heap

"std::priority_queue doesn't support decrease-key. Use std::set (O(log n) operations) or implement a Fibonacci heap if you need O(1) amortized decrease-key (rare in practice)."

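The std::set option can be sketched as follows: decrease-key becomes an erase of the old (key, id) pair followed by an insert of the new one, both O(log n). IndexedMinQueue and its methods are hypothetical names, and the sketch assumes item ids in the range 0..n-1:

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <utility>
#include <vector>

// Hypothetical min-priority queue with decrease-key, backed by std::set.
class IndexedMinQueue {
public:
    explicit IndexedMinQueue(std::size_t n) : key_(n, 0), present_(n, false) {}

    bool empty() const { return ordered_.empty(); }

    void push(std::size_t id, long long key) {
        assert(id < key_.size() && !present_[id]);
        key_[id] = key;
        present_[id] = true;
        ordered_.emplace(key, id);
    }

    // Erase the old entry and insert the new one: two O(log n) operations.
    void decrease_key(std::size_t id, long long new_key) {
        assert(id < key_.size() && present_[id] && new_key <= key_[id]);
        ordered_.erase(std::make_pair(key_[id], id));
        key_[id] = new_key;
        ordered_.emplace(new_key, id);
    }

    // Removes and returns the (key, id) pair with the smallest key.
    std::pair<long long, std::size_t> pop_min() {
        assert(!ordered_.empty());
        const std::pair<long long, std::size_t> top = *ordered_.begin();
        ordered_.erase(ordered_.begin());
        present_[top.second] = false;
        return top;
    }

private:
    std::set<std::pair<long long, std::size_t>> ordered_;
    std::vector<long long> key_;   // current key of each id
    std::vector<bool> present_;    // whether the id is in the queue
};
```

Storing the current key per id is what makes the erase possible: the set is ordered by (key, id), so the old pair has to be reconstructed exactly.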
6. How Algo-7B Fits in the Ensemble

Algo-7B is our 8th specialist, and it doesn't work alone. The orchestrator routes algorithm-heavy queries to Algo-7B, but often coordinates with other models:

Scenario: "Implement Dijkstra's algorithm and profile it"

Route: Algo-7B generates the implementation → Performance Optimizer (from our earlier models) suggests SIMD optimizations for distance updates → Debug SLM adds profiling instrumentation

Scenario: "Why is my graph traversal crashing?"

Route: Debug SLM analyzes the crash (iterator invalidation) → Algo-7B suggests the correct algorithm pattern (BFS with stable iterators) → CodeGen writes the fixed implementation

Scenario: "Design a cache-oblivious B-tree"

Route: Algo-7B explains the cache-oblivious B-tree algorithm → Design SLM suggests the class structure and API → Template Parser (from our SLM ensemble) handles the template metaprogramming for custom allocators

The ensemble is more powerful than any individual specialist. Algorithm design doesn't happen in a vacuum—it needs debugging, optimization, and integration with real codebases.

7. Technical Specifications

Model Architecture

Parameters

- Total: 7B parameters

- Active (MoE): 1.4B parameters per forward pass

- Experts: 128 routed + 1 shared

- Activation ratio: ~20% (1.4B of 7B parameters)

Training

- Tokens: 170B

- Precision: FP16 (training), NVFP4 (inference)

- Optimizer: Muon (not AdamW)

- Compute: 8x B200 GPUs, ~60 hours

Layer Stack

Like our other specialists, Algo-7B uses a hybrid architecture:

- Regular layers: Standard transformer attention for reasoning

- TE layers: NVIDIA Transformer Engine for mixed precision

- Mamba 3 TE layers: State-space models for O(n) long-context (algorithm proofs can be long)

- MoE routers: Dynamic expert selection based on query type

Tokenizer Notes

Algo-7B's tokenizer is partially compatible with our other models but includes special tokens for:

- <PSEUDOCODE> and </PSEUDOCODE> markers

- Big-O notation: O(n), O(log n), etc. as single tokens

- Common algorithm names: "quicksort", "mergesort", "dijkstra" (single tokens)

- Data structure names: "heap", "stack", "tree" (single tokens)

This tokenization makes the model more efficient when reasoning about algorithms—fewer tokens means more context fits in the window.

Why This Matters

Algorithms are the foundation of software engineering. But the gap between understanding an algorithm and implementing it correctly in production code is huge. Most developers struggle here—not because they're bad at algorithms, but because the translation layer (from pseudocode to C++) is full of gotchas.

Algo-7B is our answer to this problem. It's not trying to replace algorithm textbooks or competitive programming practice. It's a tool to help you move faster from "I understand this algorithm" to "I wrote correct, efficient C++ that actually works."

And because it's a 7B specialist (not a 70B generalist), it runs on your local workstation. You don't need cloud APIs or GPU clusters. Just a GB10 GPU and you're set.

Meet the full ensemble

Algo-7B is our 8th specialist. Together with C++, CMake, Debug, Shell, Orchestration, Design, and Review SLMs, we cover every aspect of C++ development.

Explore the SLM Ensemble →

Try These Prompts

"Convert this pseudocode to modern C++ with STL algorithms"

"What's the time complexity of my recursive function?"

"Suggest a better data structure for this use case"

"Optimize this O(n²) solution to O(n log n)"

"Explain the algorithm in this code and suggest improvements"