Engineering Blog

Technical deep dives from the WEB WISE HOUSE team. No marketing fluff—just honest takes on training infrastructure, vector databases, code search, and what it actually takes to build AI for C++ engineers.

84 articles covering vector search, SLM architecture, and production ML systems

MongoDB Atlas Vector Search: Jack of All Trades?

When your document database decides it can do vectors too. A first-principles look at Atlas Vector Search, where it shines, and where you should probably use something else.

Elasticsearch kNN: The Search Engine Strikes Back

When your existing Elastic cluster learns vector search. A practical guide to dense vectors in ES, hybrid search, and when the old dog's new tricks are actually impressive.

The Latent Bridge: How Our 8 SLMs Talk Without Words

Why we ditched token-based inter-model communication for direct semantic vector channels. Inspired by recent research on cross-model latent bridges, our 8 specialist SLMs now share meaning at latent speed.

The Birth of Vector Search: From Euclidean Dreams to FAISS Reality

How Facebook's internal needs birthed vector search, the early days of FAISS, and the wild west before dedicated vector databases existed.

When Approximate Became Good Enough: The ANN Revolution

Why 99% recall changed everything, the tradeoff that enabled billion-scale search, and how LSH and HNSW rewrote the rules of similarity search.

The 2019 Vector Database Explosion: How Pinecone, Milvus, and Weaviate Changed the Game

Why 2019 was the pivotal year when vector search transitioned from research libraries to managed services. The founding of Pinecone, Milvus going open-source, and Weaviate's semantic approach.

FAISS vs. The World: Why We Still Build on Facebook's Foundation

Technical deep dive into why FAISS remains relevant despite the vector database explosion. Raw performance, algorithmic depth, and why we built FAISS Extended instead of starting from scratch.

The Great Embedding Explosion of 2022: When Everything Became a Vector

How text-embedding-ada-002 changed the vector database landscape forever, creating new scale requirements that existing solutions couldn't handle.

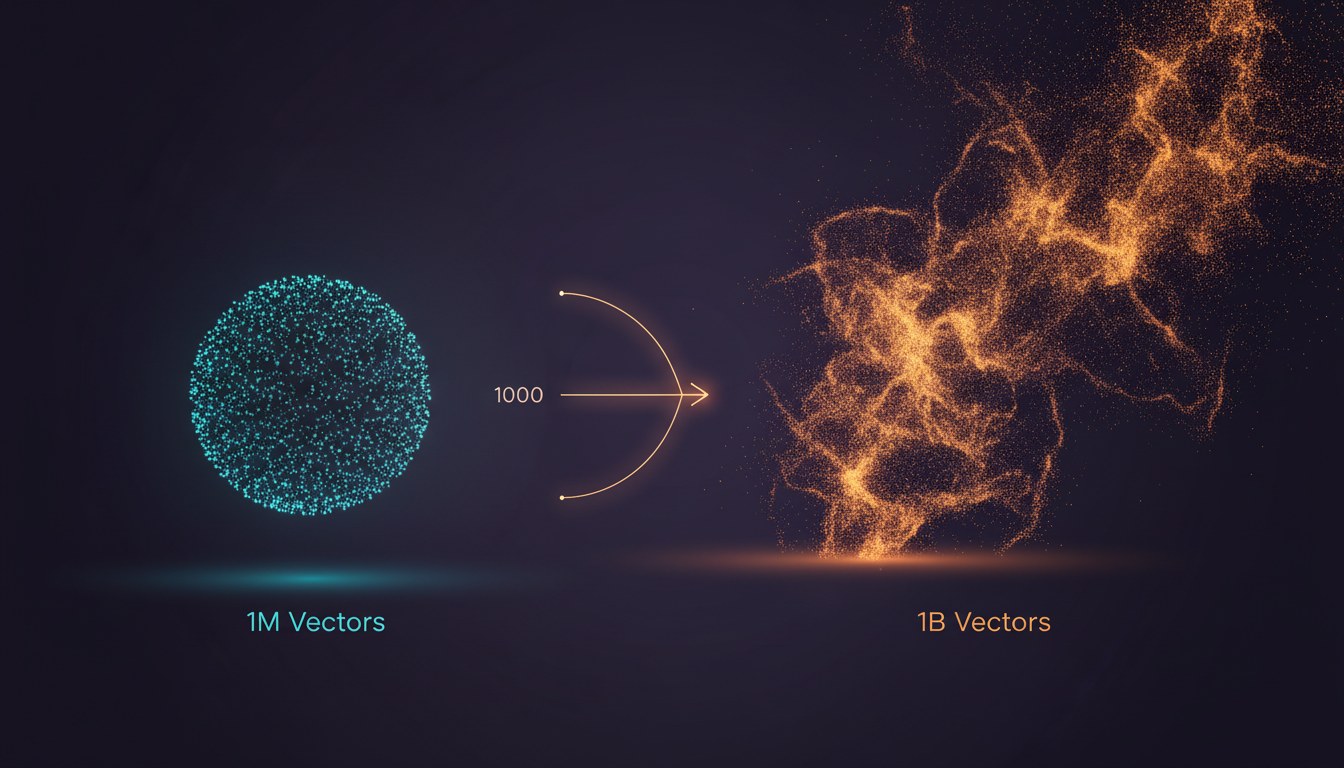

From 1M to 1B Vectors: The Scaling Crisis That Created MLGraph

Why existing vector database solutions couldn't handle our scale requirements, what we learned building around their limitations, and how it led us to create MLGraph.

Want to learn more about our work?

Check out our product documentation or get in touch to discuss how we can help with your C++ AI needs.